Bringing OFA (Once-for-All) to FPGA

Introduction

Deep learning (DL) models need to be deployed on diverse hardware platforms, ranging from cloud servers with trillions of FLOPs/s to mobile phones and microcontrollers with orders of magnitude less computation and memory. Achieving the best performance requires many deep learning experts to carefully tune the model architecture for each hardware platform and efficiency constraint. Training these DL models also requires vast computational resources, causing excessive CO2 emissions.

Once-for-All (OFA) is an efficient AutoML technique to reduce the engineering cost and training cost of deep learning. Unlike previous AutoML approaches that require repeating the architecture search process and retraining the searched architecture from scratch for each case, OFA decouples training from search. It trains a single OFA network from which we can grab many different sub-networks without training. Therefore, getting a new specialized neural network for a given hardware platform and efficiency constraint with the OFA network is highly efficient, incurring little computation cost.

“OFA is a very effective AutoML technique that can quickly produce specialized, compact and efficient neural network architectures on Xilinx FPGAs. OFA consistently outperforms hand-designed models and existing industry standards,” says Lu Tian, director of AI algorithms at Xilinx.

Advantages of Using OFA

Specialized neural network: OFA provides specialized neural networks tailored to the target hardware and target efficiency.

Low cost: searching for a new specialized neural network with OFA incurs no training cost. It can be done in seconds using a single GPU.

Strong performance: state-of-the-art accuracy on ImageNet under the mobile setting (80% with only 600M MACs). OFA also took first place in the 5th Low-Power Computer Vision Challenge (CPU track and FPGA track), the 4th Low-Power Computer Vision Challenge (CPU classification track and detection track), and the 3rd Low-Power Computer Vision Challenge (DSP track).

How does OFA work?

OFA consists of a training phase and a deployment phase. The training phase is done only once, while the deployment phase can be repeated many times at little cost.

Training Phase

In the training phase, the goal is to train an OFA network that flexibly supports different depths, widths, kernel sizes, and resolutions without retraining. The optimization objective is to improve the accuracy of all sub-networks derived by selecting different parts of the OFA network. The key challenge is that we need to support a large number (more than 10^19) of sub-networks that interfere with each other when training the OFA network.
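To give a sense of how quickly the number of sub-networks grows, the count can be worked out for one concrete design space. The sketch below assumes the search space described in the OFA paper (five units, each with 2 to 4 layers, and each layer choosing among three kernel sizes and three width expansion ratios); none of these values are stated in this article, and input resolution is left out of the count.

```python
# Assumed design space (from the OFA paper, not from this article).
NUM_UNITS = 5                 # number of sequential units in the network
DEPTH_CHOICES = (2, 3, 4)     # possible number of layers per unit
KERNEL_CHOICES = (3, 5, 7)    # possible kernel sizes per layer
EXPAND_CHOICES = (3, 4, 6)    # possible width expansion ratios per layer


def count_subnetworks(num_units=NUM_UNITS, depths=DEPTH_CHOICES,
                      kernels=KERNEL_CHOICES, expands=EXPAND_CHOICES):
    """Count the distinct architectures, ignoring input resolution."""
    per_layer = len(kernels) * len(expands)            # choices for one layer
    per_unit = sum(per_layer ** d for d in depths)     # one unit, any depth
    return per_unit ** num_units                       # units are independent


total = count_subnetworks()
print(f"{total:.2e} sub-networks")
```

Under these assumptions the total is on the order of 10^19, which is why the sub-networks cannot be trained or even evaluated one by one.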

To address this challenge, we introduce a progressive shrinking algorithm for training OFA networks. It starts by training the full network with maximum depth, width, and kernel size. The network is then fine-tuned to support progressively smaller sub-networks. Progressive shrinking can be viewed as a generalized version of network pruning that shrinks not only the width dimension but also the depth, kernel size, and resolution dimensions of the full model. Additionally, it fine-tunes both large and small sub-networks rather than a single pruned network.
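The staged nature of progressive shrinking can be illustrated with a small sampler. This is a minimal sketch, not the actual training code: the choice lists, the stage order (kernel size, then depth, then width), and the names `FULL`, `CHOICES`, `STAGES`, and `sample_subnet` are all assumptions made for illustration, and the fine-tuning step itself is omitted.

```python
import random

# Hypothetical search space and stage order, for illustration only.
FULL = {"kernel": 7, "depth": 4, "width": 6}
CHOICES = {"kernel": [3, 5, 7], "depth": [2, 3, 4], "width": [3, 4, 6]}
STAGES = [
    [],                             # stage 0: train the full network only
    ["kernel"],                     # stage 1: also allow smaller kernels
    ["kernel", "depth"],            # stage 2: also allow shallower networks
    ["kernel", "depth", "width"],   # stage 3: also allow narrower networks
]


def sample_subnet(stage, rng):
    """Sample a sub-network config; dimensions not yet unlocked stay at max."""
    unlocked = STAGES[stage]
    return {
        dim: rng.choice(CHOICES[dim]) if dim in unlocked else FULL[dim]
        for dim in FULL
    }


rng = random.Random(0)
for stage in range(len(STAGES)):
    cfg = sample_subnet(stage, rng)
    # In real training, fine-tuning steps would run on `cfg` here.
    print(stage, cfg)
```

Because the maximum value stays in every choice list, large sub-networks keep being sampled in later stages alongside small ones.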

Deployment Phase

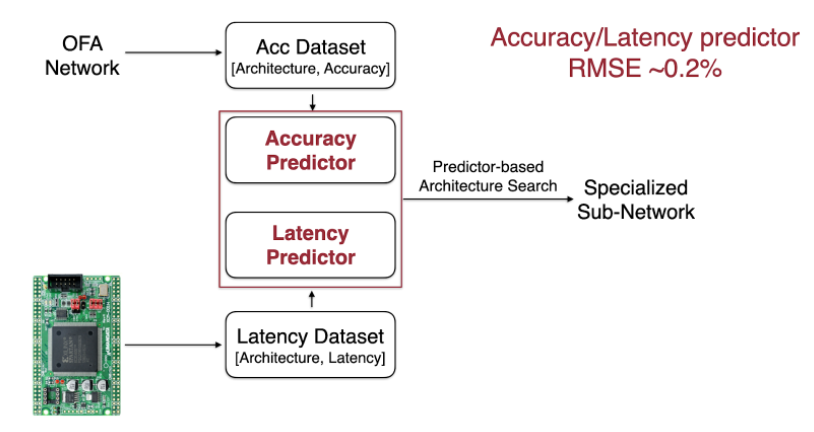

The goal of the deployment phase is to derive a specialized sub-network from the trained OFA network for a given hardware platform and efficiency constraint. The key components that make this deployment fast are accuracy predictors and efficiency predictors. The accuracy predictor predicts the top-1 accuracy of a given sub-network, so we do not need to run costly inference on ImageNet while searching for specialized models. The accuracy predictor is trained on an accuracy dataset built with the OFA network.

The rationale for efficiency predictors, especially the latency predictor, is that measuring the latency of a sub-network on the fly is also costly. The latency predictor is designed to eliminate this cost. For FPGA, a simple latency lookup table that maps each candidate operation to its estimated latency already provides very accurate latency prediction.

Based on the accuracy predictor and the latency predictor, an evolutionary search is conducted to get a specialized sub-network for the given target hardware and latency constraint. A tutorial about this phase is available at OFA@Colab.
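The search loop described above can be sketched as follows. This is a toy version under stated assumptions: `predict_accuracy` and `predict_latency_us` are made-up stand-ins for the trained accuracy predictor and the latency lookup table, a sub-network is reduced to one kernel size per layer, and the population sizes and mutation rate are arbitrary. It is not the OFA implementation.

```python
import random

KERNELS = [3, 5, 7]   # hypothetical per-layer choices
NUM_LAYERS = 8        # hypothetical network depth


def predict_latency_us(cfg):
    """Toy latency predictor: larger kernels cost more (microseconds)."""
    return sum(500 + 100 * k for k in cfg)


def predict_accuracy(cfg):
    """Toy accuracy predictor: larger kernels help, with diminishing returns."""
    return 60.0 + sum(k ** 0.5 for k in cfg)


def random_cfg(rng):
    return tuple(rng.choice(KERNELS) for _ in range(NUM_LAYERS))


def mutate(cfg, rng, prob=0.2):
    """Resample each layer's choice independently with probability `prob`."""
    return tuple(rng.choice(KERNELS) if rng.random() < prob else k for k in cfg)


def crossover(a, b, rng):
    """Pick each layer's choice from one of the two parents."""
    return tuple(rng.choice(pair) for pair in zip(a, b))


def evolutionary_search(latency_limit_us, rng, population=50,
                        generations=20, num_parents=10):
    def sample_valid(make):
        # Rejection sampling: retry until the latency constraint is met.
        # Assumes the limit is reachable by at least one configuration.
        while True:
            cfg = make()
            if predict_latency_us(cfg) <= latency_limit_us:
                return cfg

    pop = [sample_valid(lambda: random_cfg(rng)) for _ in range(population)]
    for _ in range(generations):
        pop.sort(key=predict_accuracy, reverse=True)
        top = pop[:num_parents]                  # keep the most accurate
        children = []
        while len(children) < population - num_parents:
            if rng.random() < 0.5:
                make = lambda: mutate(rng.choice(top), rng)
            else:
                make = lambda: crossover(rng.choice(top), rng.choice(top), rng)
            children.append(sample_valid(make))
        pop = top + children
    return max(pop, key=predict_accuracy)


best = evolutionary_search(latency_limit_us=8000, rng=random.Random(0))
print(best, predict_latency_us(best), round(predict_accuracy(best), 2))
```

Since every candidate is scored by the two predictors rather than by training or on-device measurement, each generation costs only a few function calls.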

Apply OFA to FPGA

Building Latency Lookup Table

A latency lookup table is necessary for OFA to predict the latency on a specific device. The latency predictor relies on the fact that most hardware platforms, including the Xilinx DPU, run the network layer by layer. Thus, if we obtain the latency of each possible configuration of each layer, the latency of the whole network can be calculated by simply summing the per-layer latencies. OFA uses the latency lookup table to search for a model specialized for the platform. Once OFA finds a specialized network for FPGA, we quantize it using the quantization tools provided as part of Vitis AI.
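The layer-by-layer summation can be written in a few lines. The sketch below is illustrative only: the key layout (operation type, kernel size, input channels, output channels, input resolution) and every latency value in `LATENCY_LUT_US` are invented for the example and are not measurements from any DPU.

```python
# Hypothetical lookup table. Key: (op type, kernel size, in channels,
# out channels, input resolution). Value: latency in microseconds.
# All entries are made-up placeholders, not measured numbers.
LATENCY_LUT_US = {
    ("conv", 3, 3, 16, 224): 120,
    ("mbconv", 3, 16, 24, 112): 210,
    ("mbconv", 5, 24, 40, 56): 180,
    ("mbconv", 7, 24, 40, 56): 260,
}


def predict_latency_us(layers, lut=LATENCY_LUT_US):
    """Estimate network latency as the sum of per-layer table entries."""
    missing = [layer for layer in layers if layer not in lut]
    if missing:
        # A layer configuration that was never profiled cannot be estimated.
        raise KeyError(f"no latency entry for: {missing}")
    return sum(lut[layer] for layer in layers)


network = [
    ("conv", 3, 3, 16, 224),
    ("mbconv", 3, 16, 24, 112),
    ("mbconv", 5, 24, 40, 56),
]
print(predict_latency_us(network), "us")
```

In practice the table would be filled once by profiling each candidate layer configuration on the target device, after which every estimate during the search is a dictionary lookup.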

Improving the Utilization

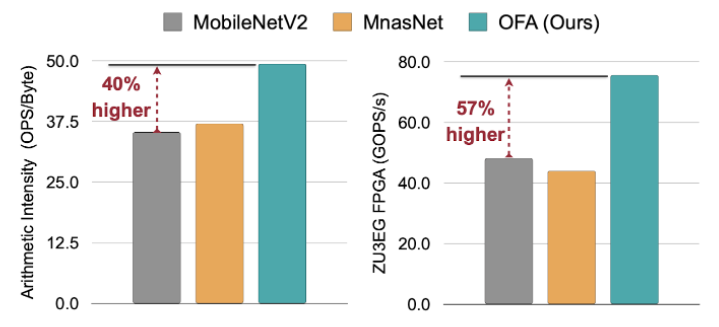

Memory access is expensive, while computation is cheap. An efficient CNN for FPGA should do as much computation as possible with a small memory footprint. The ratio of the two is the arithmetic intensity (OPs/Byte). The higher the OPs/Byte, the less memory-bound the model is and the easier it is to parallelize. OFA's arithmetic intensity is 40% higher than MobileNetV2's, which raises utilization and throughput (GOPS) by 57%.
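As a rough illustration of the OPs/Byte ratio, the sketch below estimates the arithmetic intensity of a single convolution layer. It rests on simplifying assumptions that are not from this article: stride 1 with "same" padding, one byte per element (as with INT8 quantization), each tensor moved to or from memory exactly once, and one multiply-accumulate counted as two operations. The layer shapes are arbitrary examples.

```python
def conv_arithmetic_intensity(h, w, c_in, c_out, k, groups=1, bytes_per_elem=1):
    """Estimate OPs/Byte for one conv layer (stride 1, 'same' padding).

    Assumes c_in is divisible by groups and that weights, input
    activations, and output activations are each transferred once.
    """
    macs = h * w * c_out * (c_in // groups) * k * k
    ops = 2 * macs                                   # 1 MAC = 2 operations
    weight_bytes = c_out * (c_in // groups) * k * k * bytes_per_elem
    act_bytes = (h * w * c_in + h * w * c_out) * bytes_per_elem
    return ops / (weight_bytes + act_bytes)


# A 3x3 depthwise convolution versus a 1x1 pointwise convolution.
depthwise = conv_arithmetic_intensity(56, 56, 144, 144, k=3, groups=144)
pointwise = conv_arithmetic_intensity(56, 56, 144, 24, k=1)
print(f"depthwise: {depthwise:.1f} OPs/Byte, pointwise: {pointwise:.1f} OPs/Byte")
```

Under these assumptions the depthwise layer performs far fewer operations per byte moved than the pointwise layer, so it is the one more likely to be limited by memory bandwidth.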

Improving the Accuracy Given Latency Constraint

Higher utilization leads to better accuracy or better efficiency on FPGA. At the same latency, OFA provides up to 7.9% higher top-1 accuracy on ImageNet than MobileNetV2. At a similar ImageNet accuracy, OFA provides a 1.5x speedup on FPGA over MobileNetV2.

The Future

We believe OFA is a new paradigm that decouples model training from neural architecture search. It paves the way to accelerate many on-device AI applications and specialize them across many devices. We will investigate how to further improve performance on other tasks and specialized hardware platforms using OFA networks. There is also an opportunity for algorithm and hardware co-design, which opens up a much larger design space.