LIDAR Pulse Detection Accelerator Model Based Design Targeting Vitis and RFSoC

Abstract

LIDAR is a growing market in automotive applications and is important in mapping and other forms of metrology. High-speed LIDAR requires processing multiple gigasamples per second of laser pulse return data, which makes it an excellent target for the Vitis™ unified software platform. Data scientists may wish to iterate on pulse detection algorithms in a high-level tool such as the MathWorks Simulink model-based design environment in order to optimize them for sub-sample accuracy and clutter removal. The underlying logic, by contrast, is relatively static: acquiring the laser pulses, sending them to the pulse detection accelerator, and then passing the detected pulses to a processor running Linux for further processing. That makes it an excellent candidate to be encapsulated in a Vitis software platform.

Introduction

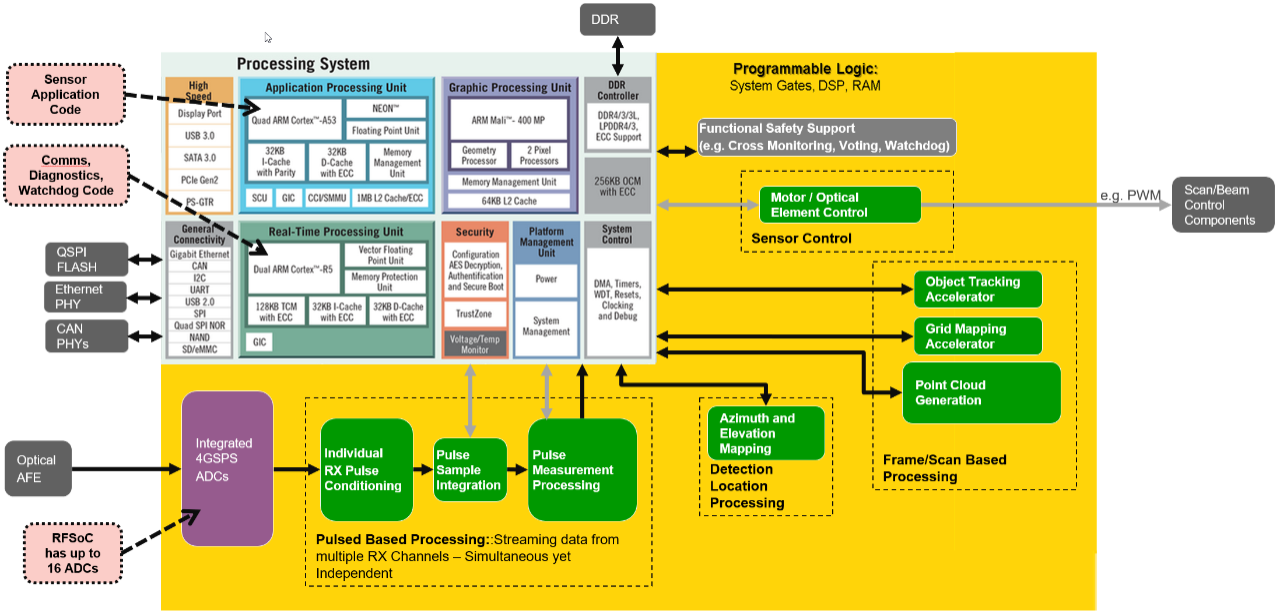

Highly integrated LIDAR systems can be implemented with RFSoC. RFSoC includes on chip ADCs for the capturing of the analog LIDAR pulse return signals, programmable logic for the acceleration of pulse processing and a complete SoC processor to tie it all together. Figure 1 below shows a block diagram of such a system.

To show an example of how such a system might be developed, we put together an example system based on Vitis technology for development. It targets the ZCU111, a development board for the RFSoC with integrated 4GSPS ADCs. A basic Vivado designed target platform for the programmable logic has been created that instances one integrated 4GSPS ADC, driving the Vitis tool accelerator which then drives a buffer memory. The design also includes a DAC instance and DAC accelerator that may be used to provide simulated LIDAR return pulses for development & debug. Figure 2 shows a block diagram of our Vitis tool system design.

The part of the Vitis software platform design in the Programmable Logic (PL) requires traditional FPGA design skills to implement. This includes designing with IP Integrator (IPI) and HDL. This part of the overall system design can be relatively static. The raw data rate coming from the integrated ADCs in the RFSoC is fixed by system level requirements. The path to communicate detected and measured LIDAR return pulses can also be static. But the algorithms to preprocess, detect and measure the position of LIDAR return pulses may require extensive algorithm development and testing iterations. These algorithms must also process huge amounts of raw data: 8 LIDAR pulse receivers can produce 64GB/sec of raw data. The processing in the accelerator must funnel this down to a much smaller dataset consisting of the measured points.

To develop the acceleration kernel, we chose MathWorks’ Simulink with the Xilinx System Generator Super Sample Rate (SSR) blockset. Simulink is a graphical simulation and design environment that we can use to rapidly test and debug our accelerator design. The SSR blockset processes multiple samples per clock cycle and is optimized to clock the PL at high frequencies. With a PL clock frequency of 500MHz and a super sample rate of 8x, we can process 4GSPS of data from the ADC. Multiple such processing pipelines can be instanced to process the data from multiple ADCs.
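The rates quoted above can be checked with a few lines of arithmetic. The sketch below is only an illustration; it assumes 16-bit samples, which is consistent with the 128-bit streams carrying 8 samples per clock used later in this design, and the function names are made up for this example.

```python
# Back-of-the-envelope check of the throughput figures quoted in the text.
PL_CLOCK_HZ = 500e6      # PL clock frequency
SSR_FACTOR = 8           # samples processed per PL clock cycle
BYTES_PER_SAMPLE = 2     # assumption: 16-bit samples (8 x 16 bits = 128 bits)
NUM_RECEIVERS = 8        # receivers in the 64GB/sec example


def samples_per_second(clock_hz, ssr_factor):
    """Sample throughput of one SSR processing pipeline."""
    return clock_hz * ssr_factor


def raw_bytes_per_second(sample_rate, bytes_per_sample, receivers):
    """Aggregate raw data rate for several receivers."""
    return sample_rate * bytes_per_sample * receivers


rate = samples_per_second(PL_CLOCK_HZ, SSR_FACTOR)
total = raw_bytes_per_second(rate, BYTES_PER_SAMPLE, NUM_RECEIVERS)
print(rate / 1e9, "GSPS per pipeline")
print(total / 1e9, "GB/sec for", NUM_RECEIVERS, "receivers")
```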

Vitis technology ties it all together. The Vitis software platform consists of the Vivado target platform design in the PL and the Linux environment on the processing subsystem. Acceleration kernels designed in Simulink, other accelerators, application software, and the platform are integrated together by the linking process in the Vitis tool to create a complete programming of the RFSoC.

Simulink Design of LIDAR Pulse Detection Accelerators

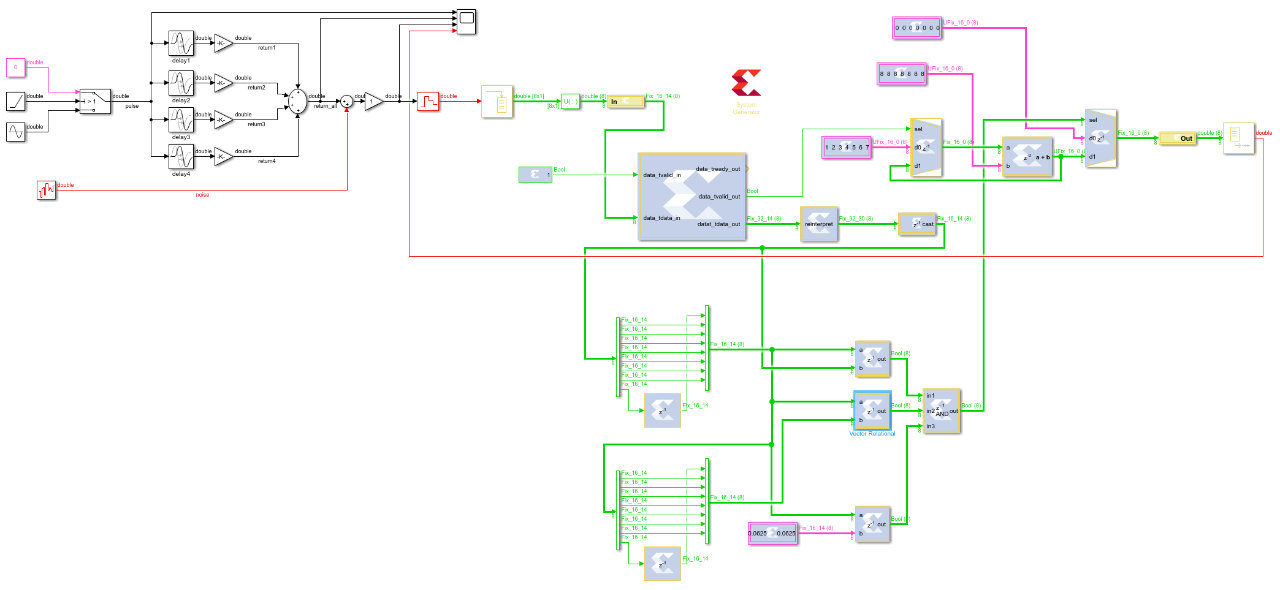

Figure 3 below shows our full Simulink canvas.

The white blocks on the left are our model of the LIDAR return pulses and the ADC. They are built out of native Simulink blocks. The gray blocks on the right are Xilinx System Generator SSR blocks that make up our accelerator design. The output of our pulse model is fed into our accelerator design. Finally, on our Simulink canvas we feed the output of the accelerator back to a Simulink scope. This lets us observe a number of points in our model as well as the accelerator output.

Figure 4 shows the model we created for the LIDAR pulse and the ADC. On the left we start with a switch block that selects between a sine wave with a DC offset and, after the first cycle, a constant zero. This produces a single raised cosine pulse at the beginning of simulation time, which models a pulse from the laser light source. This pulse is fed to 4 parallel delays that model the time of flight for each return signal. Next, each of the four return signals has a gain stage to model the attenuation of the return pulse. In these blocks, MATLAB variables are used to set the width of the original pulse, and the delay and attenuation of each return pulse. With these MATLAB variables, different scenarios can quickly be tested. Next, the 4 return signals are summed together, noise is added in and a gain stage is included. Finally, the RFSoC integrated ADC is modeled with 3 more blocks. The first converts the continuous signal to a discretely sampled signal at 4GSPS. The second buffers the data to produce 8 samples in parallel at a slower clock rate of 500MHz. The last block reorganizes the data from a column vector to a row vector, which is the format we need to go into our SSR accelerator.
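For readers without Simulink at hand, the stimulus model described above can be approximated in a few lines of Python. This is a rough sketch rather than a translation of our Simulink blocks: the function names, the Gaussian noise model and the parameter values are all assumptions chosen for illustration.

```python
import math
import random


def raised_cosine_pulse(width):
    """One cycle of an offset cosine: starts at zero and peaks at 1.0."""
    return [0.5 * (1.0 - math.cos(2.0 * math.pi * n / width))
            for n in range(width)]


def model_returns(pulse, delays, gains, total_len, noise_sigma=0.0, seed=0):
    """Sum delayed, attenuated copies of the pulse and add Gaussian noise.

    `delays` are in ADC samples; `gains` model the attenuation of each return.
    """
    rng = random.Random(seed)
    signal = [0.0] * total_len
    for delay, gain in zip(delays, gains):
        for n, value in enumerate(pulse):
            if delay + n < total_len:
                signal[delay + n] += gain * value
    return [s + rng.gauss(0.0, noise_sigma) for s in signal]


def to_ssr_rows(samples, ssr=8):
    """Group the sample stream into rows of `ssr` samples, one row per PL clock."""
    usable = len(samples) - len(samples) % ssr
    return [samples[i:i + ssr] for i in range(0, usable, ssr)]


# Hypothetical scenario: four returns with different delays and attenuations.
pulse = raised_cosine_pulse(16)
adc = model_returns(pulse, delays=[40, 90, 150, 220],
                    gains=[0.8, 0.5, 0.3, 0.2],
                    total_len=320, noise_sigma=0.02)
rows = to_ssr_rows(adc)
print(len(rows), "clock cycles of", len(rows[0]), "samples each")
```

Changing the delay and gain lists plays the same role as changing the MATLAB variables in the Simulink model.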

The block in the upper right with 4 inputs is a scope, which gives you the ability to observe any signal. Figure 5 below shows the single raised cosine pulse, the 4 delayed and attenuated return pulses, the return pulses with noise added and the output of the accelerator. The accelerator output is nonzero when a pulse is detected and the value it outputs is the measured time of flight for the pulse. The accelerator output is delayed relative to the model signals due to the processing pipeline delay of the accelerator, which is also modeled by Simulink.

Our example accelerator from the Simulink canvas is shown below in figure 6. It is composed of blocks from the Xilinx System Generator blockset. The blocks with double line borders are SSR (Super Sample Rate) blocks, which means that on every clock cycle of the PL (Programmable Logic) they process multiple samples. In our case, to process data at the 4GSPS rate of our ADC, these blocks process 8 samples every 500MHz PL clock cycle.

The yellow In block on the left side and the Out block on the right side of the accelerator are gateway blocks. They mark the boundary between the Simulink modeling, test and observation blocks and the System Generator blocks that compose the accelerator. The thick wires in the accelerator represent the SSR data paths. Each will actually be 8 parallel datapaths in the PL hardware clocked at the 500MHz rate.

The first large block after the yellow In block is a FIR filter. It has been programmed to perform a cross-correlation of the input data with a raised cosine. This searches for incoming energy that is shaped like a LIDAR return pulse. The correlation result from the FIR is sent through delays so that three adjacent samples are available for processing. Because every clock is 8 samples, to get a one sample delay we barrel shift 7 of the samples, and delay the 8th sample that falls off the end by 1 clock to produce a new SSR data path that has the data delayed by one ADC sample.
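The two ideas in this paragraph, correlation with a pulse-shaped template and a one-sample delay on an 8-wide data path, can be sketched in software as follows. This is an illustrative model only, not the System Generator implementation. It assumes lane 0 of each row holds the oldest sample, and the function names are hypothetical.

```python
def correlate(samples, template):
    """Cross-correlate `samples` with `template`, FIR style.

    y[n] = sum_k template[k] * x[n - (K - 1) + k], with x treated as zero
    before the first sample. A copy of the template that starts at sample d
    therefore peaks at output index d + K - 1.
    """
    k_len = len(template)
    out = []
    for n in range(len(samples)):
        acc = 0.0
        for k, coeff in enumerate(template):
            idx = n - (k_len - 1) + k
            if idx >= 0:
                acc += coeff * samples[idx]
        out.append(acc)
    return out


def ssr_delay_one_sample(rows, ssr=8):
    """Delay an SSR stream by one ADC sample.

    Output lanes 1..7 take lanes 0..6 of the same row (the shift). Output
    lane 0 takes lane 7 of the previous row, held for one clock in a register.
    """
    carried = 0  # register contents before the first clock
    out = []
    for row in rows:
        out.append([carried] + row[:ssr - 1])
        carried = row[ssr - 1]
    return out


rows = [list(range(0, 8)), list(range(8, 16))]
for row in ssr_delay_one_sample(rows):
    print(row)
```

Applying the delay twice yields the second delayed copy, so that three adjacent samples are available on every lane in the same clock cycle.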

The middle correlation result of the three is compared against a threshold. Noise on the incoming signal will make small correlation peaks, and we want to reject these by only paying attention to peaks that are larger than our threshold. Things like the threshold level and the FIR filter correlation values could be controlled by software from the PS, but in our simple example, we chose to hardwire them. In parallel with the threshold, the three adjacent correlation result values are compared to determine if the center one is a local maximum. If the center is a local maximum and above threshold, then we output the time of flight counter; otherwise, we output zeros.
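The detection rule described above can be written as a short reference model. This sketch assumes the time of flight counter simply counts ADC samples from the start of the capture, and it picks one particular tie-breaking rule for equal neighbors. Neither detail is specified by our design description, so treat both as assumptions.

```python
def detect_pulses(corr, threshold):
    """Return a list as long as `corr` holding the sample index where a
    pulse is detected and 0 everywhere else.

    A detection needs the center of three adjacent correlation values to be
    above `threshold` and to be a local maximum.
    """
    out = [0] * len(corr)
    for n in range(1, len(corr) - 1):
        prev, center, nxt = corr[n - 1], corr[n], corr[n + 1]
        # Assumption: strict on the left, non-strict on the right, so a flat
        # two-sample peak is reported once.
        if center > threshold and center > prev and center >= nxt:
            out[n] = n  # stand-in for the time of flight counter value
    return out


corr = [0, 0, 1, 4, 6, 4, 1]
print(detect_pulses(corr, threshold=3))
```

Because zero marks "no pulse", a pulse at counter value zero cannot be reported with this encoding.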

Our simple example calculates the time of flight to the precision of one ADC sample. At 4GSPS this gives us a distance resolution of approximately 4 cm. If we wanted more accuracy, we could add curve fitting or interpolation to our accelerator to measure the position in time of the return pulse more precisely.
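The resolution figure follows from the sample period and the round trip of the light. The sketch below works through that arithmetic and shows three-point parabolic interpolation as one possible way to refine the peak position. The interpolation method is an example we chose for illustration, not something implemented in our accelerator.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def range_per_sample(sample_rate_hz):
    """Distance represented by one ADC sample of time of flight.

    The light travels out and back, hence the division by two.
    """
    return SPEED_OF_LIGHT / (2.0 * sample_rate_hz)


def parabolic_offset(prev, center, nxt):
    """Sub-sample offset of the vertex of a parabola fitted through three
    equally spaced correlation values. Positive means the true peak lies
    toward `nxt`.
    """
    denom = prev - 2.0 * center + nxt
    if denom == 0.0:
        return 0.0  # flat neighborhood: no refinement possible
    return 0.5 * (prev - nxt) / denom


step = range_per_sample(4e9)
print(round(step * 100, 2), "cm per ADC sample")
offset = parabolic_offset(3.0, 6.0, 5.0)
print("refined peak offset:", offset, "samples")
```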

We use the Simulink environment to simulate the operation of our accelerator. See figure 5 above for the scope output showing the simulated LIDAR return pulses entering the accelerator simulation and the result pulse detections represented by the time of flight measurements on the output of the accelerator.

The block in the top center of the accelerator with the Xilinx logo is the System Generator block. It is used to set the clock rate and other options that affect the operation of the accelerator. Once we are happy with the operation of our accelerator in Simulink, then we can move on to generating it. This is done by opening the system generator block, going to the compilation tab, selecting HDL Netlist compilation, picking a target directory and clicking on generate.

The generation produces a design hierarchy for the accelerator in the target directory that we specified. This includes a top-level Verilog HDL wrapper that you can see in appendix A below. In a later step, we will connect this HDL into a Vitis RTL Kernel so that we can use it as an accelerator in our system.

Vitis PL Target Platform for Accelerators – Designed in Vivado

We just designed an accelerator for our LIDAR pulses, but we need a place to put that accelerator. This requires a target platform design for the PL of our RFSoC on the ZCU111 board. Xilinx Alveo cards have pre-built platforms that are available for you to use. But in our case there are not yet any prebuilt platform designs for the RFSoC, so we built one. To create a target platform you will need HDL and FPGA design skills. IP Integrator (IPI) in Vivado is used to capture the target platform design in the PL. HDL components under the IPI design may also be required. John Mcdougall on our team, an expert FPGA and embedded designer, created our target platform and makefile compilation and linking flow.

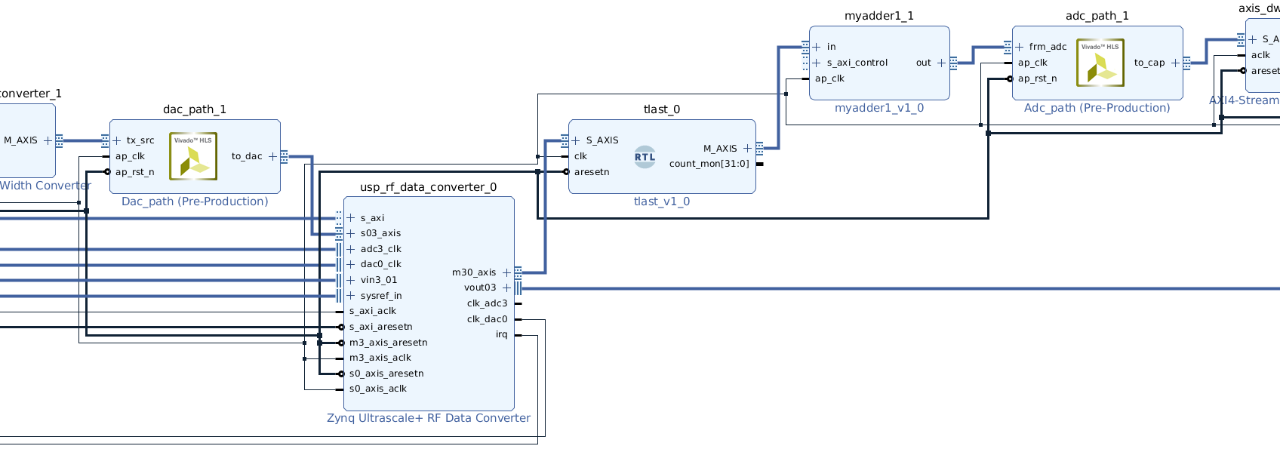

Figure 7 below shows an IPI block diagram of our completed system project that includes the target platform and the acceleration kernels in place. Our target platform is all of this except for the two accelerator parts. You can see that within the diagram are blocks that have a 1:1 association with the blocks in figure 2 - Example system design on RFSoC board.

The target platform design provides input and output ports for the accelerators but does not include the accelerators themselves. The accelerators get connected to the target platform later with the Vitis tool linking process. In our case, our accelerators each have 128 bit AXI-Stream inputs and outputs. Because the input to the ADC accelerator comes from the ADC which can’t take backpressure we operate the accelerator in a continuous manner without any stalls. Similarly, the DAC can’t accept any stalls on its input so the DAC accelerator also does not stall.

We used a makefile to build the target platform design and compose the complete Vitis platform to put our accelerator in. See Appendix B for this makefile that builds the platform.

Making our System Generator output into a Vitis Acceleration Kernel

Previously we showed how we generate an accelerator design from System Generator in Simulink. But we need to make Vitis technology understand that what we generated is an accelerator or kernel that it can use. To do this we use the RTL Kernel Wizard. This is available from the Vitis GUI, or Vivado. We used this wizard to create a prototype accelerator with the right kinds of ports that we need. Then we edited the HDL generated for the prototype to connect to our System Generator generated code. This process only needs to be done once. Unless we change the interfaces on our accelerator, we can modify our accelerator in System Generator as much as we wish without needing to repeat this step.

From Vivado the steps with the RTL Kernel Wizard are as follows:

- Create an empty Vivado project with the desired part or board, ZCU111 in our case

- Create an IP instance of IP “rtl_kernel_wizard” with the customization you need

- Right-click on the IP instance -> Open IP Example Design… -> IP example project comes out

- Once the IP example project is up, you can hook your RTL design with the top-level Verilog file

For step 2, on page 2 of the wizard, we changed the Kernel control interface to “ap_ctrl_none” because we did not need a control interface. And on page 5 of the wizard, we added our 128-bit wide streaming input and 128-bit wide streaming output. See figure 8 below for screenshots of these wizard pages.

Per step 4 above, to connect the System Generator output to this prototype Vitis Kernel, we edited the HDL generated by the wizard. See Appendix C for our simple Verilog code that instances the System Generator top level shown in Appendix A.
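The wrapper in Appendix C slices the 128-bit stream word into eight 16-bit gateway ports, with gatewayin0 on bits 15:0 and gatewayin7 on bits 127:112. A small software model of that bit mapping can be handy when preparing test data or decoding captured results. The helper names below are hypothetical and the values are treated as unsigned.

```python
LANES = 8
LANE_BITS = 16
LANE_MASK = (1 << LANE_BITS) - 1


def unpack_lanes(word):
    """Split a 128-bit stream word into 8 lane values.

    Lane i corresponds to tdata[16*i + 15 : 16*i], matching gatewayin<i>.
    """
    return [(word >> (LANE_BITS * i)) & LANE_MASK for i in range(LANES)]


def pack_lanes(lanes):
    """Inverse of unpack_lanes: build the 128-bit word from 8 lane values."""
    word = 0
    for i, value in enumerate(lanes):
        word |= (value & LANE_MASK) << (LANE_BITS * i)
    return word


word = pack_lanes([1, 2, 3, 4, 5, 6, 7, 8])
print(hex(word))
print(unpack_lanes(word))
```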

The additional source files that we edited and the ones generated by System Generator are added to the Kernel with a file, additional_sources.tcl shown here:

#Source files excluding top level

# Do not change the instance name within this file because the top level files

# instantiate this instance.

import_files -quiet -fileset sources_1 ./src/myadder1_example.sv

#######################################################################

# Add files that need to be added to project here

import_files -fileset sources_1 ../src_lidar/imports

import_files -norecurse -fileset sources_1 ../src_lidar/ip/lidar_final_c_addsub_v12_0_i0/lidar_final_c_addsub_v12_0_i0.xci

import_files -norecurse -fileset sources_1 ../src_lidar/ip/lidar_final_fir_compiler_v7_2_i0/lidar_final_fir_compiler_v7_2_i0.xci

Putting it all Together with Vitis

We used a second makefile to compile our acceleration kernel and link the accelerators into the design. See Appendix D for this makefile that produces the complete design.

The makefile includes targets for 3 kernels: our RTL kernel, a DAC path kernel, and an ADC path kernel. The DAC and ADC path kernels are just pass-through placeholders. The linking stage in the makefile connects the 3 accelerators with the target platform to create the complete design. The following line from the makefile is the linking step.

$(VPP) $(VPP_OPTS) -l --config ./myadder.ini --jobs 20 --temp_dir $(BUILD_DIR) -o'$@' $(BINARY_CONTAINER_myadder_OBJS)

The myadder.ini file that is referenced is where we capture the connectivity between the accelerators and the target platform. Here is our myadder.ini file.

[connectivity]

# --connectivity.stream_connect arg <compute_unit_name>.<kernel_interface_name>:<compute_unit_name>.<kernel_interface_name>

# Specify AXI stream connection between two compute units

stream_connect = play_m_axis:dac_path_1.tx_src

stream_connect = dac_path_1.to_dac:dac_s_axis

stream_connect = adc_m_axis:myadder1_1.in

stream_connect = myadder1_1.out:adc_path_1.frm_adc

stream_connect = adc_path_1.to_cap:adc_cap_s_axis

# --connectivity.nk arg <kernel_name:number(:compute_unit_name1.compute_unit_name2...)>

# Set the number of compute units per kernel name. Default is

# one compute unit per kernel. Optional compute unit names can be specified using

# a period delimiter

nk = dac_path:1:dac_path_1

nk = adc_path:1:adc_path_1

On the DAC side, we have connected the target platform port named play_m_axis to the input port of our pass-through DAC path kernel. The output of this kernel then feeds the target platform input to the DAC at 4GSPS. This connectivity facilitates the 128K looping buffer being played out continuously to the DAC for a test signal. The software could be used to load test LIDAR return data into the DAC for testing.

On the ADC side, the 4GSPS target platform output of the ADC is connected to the input of our System Generator acceleration kernel. The output of our kernel is connected to the pass-through ADC path kernel, and the output of that is connected to the capture buffer input in the target platform. By connecting our kernel through to the capture buffer, the software can access the LIDAR pulse measurement results of our accelerator. Figure 9 below shows the connectivity of the kernels and the target platform as seen in an IPI examination of the completed design.

The dac_path_1 block is our pass-through DAC path kernel, the myadder1_1 block is the kernel we created with System Generator, and adc_path_1 is our pass-through ADC path kernel. The Vivado synthesized schematic view of our kernel from System Generator can be seen in Appendix E.
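One way to sanity check a connectivity file before a long link run is to trace each stream path in software. The sketch below parses the stream_connect lines from our myadder.ini and follows a path from a platform port through the compute units. It is a plain text parser written for this illustration, not a Vitis API, and it assumes every kernel has a single stream input and a single stream output.

```python
CONFIG_LINES = [
    "stream_connect = play_m_axis:dac_path_1.tx_src",
    "stream_connect = dac_path_1.to_dac:dac_s_axis",
    "stream_connect = adc_m_axis:myadder1_1.in",
    "stream_connect = myadder1_1.out:adc_path_1.frm_adc",
    "stream_connect = adc_path_1.to_cap:adc_cap_s_axis",
]


def parse_stream_connects(lines):
    """Return (source, destination) pairs from stream_connect lines."""
    links = []
    for line in lines:
        key, _, value = line.strip().partition("=")
        if key.strip() != "stream_connect":
            continue  # skip comments, nk entries and blank lines
        src, _, dst = value.strip().partition(":")
        links.append((src, dst))
    return links


def trace(links, start):
    """Follow the stream from `start`, hopping through each compute unit."""
    path = [start]
    current = start
    while True:
        dst = next((d for s, d in links if s == current), None)
        if dst is None:
            break
        path.append(dst)
        unit = dst.split(".")[0]
        # Find the output port of the compute unit we just entered, if any.
        onward = next((s for s, _ in links
                       if "." in s and s.split(".")[0] == unit), None)
        if onward is None:
            break  # reached a platform port
        path.append(onward)
        current = onward
    return path


links = parse_stream_connects(CONFIG_LINES)
print(" -> ".join(trace(links, "adc_m_axis")))
print(" -> ".join(trace(links, "play_m_axis")))
```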

In addition to storing the pulse measurement data in the capture buffer in the target platform, our design presently also stores all the zero data where no pulse was detected. A future enhancement could make it so that only the measurements of the detected pulses are stored, thereby greatly reducing the volume of data for software to process. Such an enhancement could be done in our System Generator kernel, in the ADC pass-through kernel, or in the target platform design.
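Until such an enhancement exists in hardware, the same reduction can be done in software on the captured data. The sketch below shows the idea on a few made-up rows of accelerator output; the function name and the sample values are hypothetical.

```python
def suppress_zeros(rows):
    """Flatten SSR rows and keep only the non-zero time of flight values."""
    return [value for row in rows for value in row if value != 0]


# Made-up capture: four clock cycles of 8 lanes, two detections.
captured = [
    [0] * 8,
    [0, 0, 0, 0, 12, 0, 0, 0],
    [0] * 8,
    [0, 0, 27, 0, 0, 0, 0, 0],
]
kept = suppress_zeros(captured)
total = sum(len(row) for row in captured)
print(kept, "kept out of", total, "captured values")
```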

Rapid Design Iterations of Pulse Detect and Measurement Accelerator

The point of this example is to show how the Vitis tool can be used to enable rapid development and algorithm exploration for our LIDAR pulse detection and measurement accelerator. Once we have our first pass accelerator designed in System Generator, the accelerator connected into a Vitis kernel, our target platform, and the makefiles to put it all together, iteration is very easy.

- Go to the Simulink canvas and modify the System Generator accelerator design and the Simulink stimulus and test bench

- Rapidly test in Simulink, iterate 1 & 2 until desired results are achieved

- Double click the System Generator block on the Simulink canvas, then click the generate button on the window that comes up

- In the rtl_kernel directory type make clean followed by make

- Go test the built design in hardware, iterate if needed by going back to step 1

That’s it. You can see that there is zero HDL or FPGA design expertise required to run through this iteration loop. Perfect for data scientists, optical engineers and systems engineers who have the big picture and the algorithm knowledge.

Appendix A – Verilog Code: Generated System Generator Top Level from lidar_final.v

`timescale 1 ns / 10 ps

// Generated from Simulink block

(* core_generation_info = "lidar_final,sysgen_core_2019_1,{,compilation=Synthesized Checkpoint,block_icon_display=Default,family=zynquplusRFSOC,part=xczu28dr,speed=-2-e,package=ffvg1517,synthesis_language=verilog,hdl_library=xil_defaultlib,synthesis_strategy=Vivado Synthesis Defaults,implementation_strategy=Vivado Implementation Defaults,testbench=0,interface_doc=0,ce_clr=0,clock_period=2,system_simulink_period=2e-09,waveform_viewer=0,axilite_interface=0,ip_catalog_plugin=0,hwcosim_burst_mode=0,simulation_time=1e-06,addsub=8,constant=33,convert=8,delay=2,fir_compiler_v7_2=1,logical=8,mux=16,reinterpret=8,relational=24,}" *)

module lidar_final (

input [16-1:0] gatewayin0,

input [16-1:0] gatewayin1,

input [16-1:0] gatewayin2,

input [16-1:0] gatewayin3,

input [16-1:0] gatewayin4,

input [16-1:0] gatewayin5,

input [16-1:0] gatewayin6,

input [16-1:0] gatewayin7,

input clk,

output [16-1:0] gatewayout0,

output [16-1:0] gatewayout1,

output [16-1:0] gatewayout2,

output [16-1:0] gatewayout3,

output [16-1:0] gatewayout4,

output [16-1:0] gatewayout5,

output [16-1:0] gatewayout6,

output [16-1:0] gatewayout7

);

wire clk_1_net;

wire ce_1_net;

lidar_final_default_clock_driver lidar_final_default_clock_driver (

.lidar_final_sysclk(clk),

.lidar_final_sysce(1'b1),

.lidar_final_sysclr(1'b0),

.lidar_final_clk1(clk_1_net),

.lidar_final_ce1(ce_1_net)

);

lidar_final_struct lidar_final_struct (

.gatewayin0(gatewayin0),

.gatewayin1(gatewayin1),

.gatewayin2(gatewayin2),

.gatewayin3(gatewayin3),

.gatewayin4(gatewayin4),

.gatewayin5(gatewayin5),

.gatewayin6(gatewayin6),

.gatewayin7(gatewayin7),

.clk_1(clk_1_net),

.ce_1(ce_1_net),

.gatewayout0(gatewayout0),

.gatewayout1(gatewayout1),

.gatewayout2(gatewayout2),

.gatewayout3(gatewayout3),

.gatewayout4(gatewayout4),

.gatewayout5(gatewayout5),

.gatewayout6(gatewayout6),

.gatewayout7(gatewayout7)

);

endmodule

Appendix B – Makefile: Platform Creation

#################################################################################

# Platform creation makefile

#

# Notes:

# Switch between pre-built or petalinux-build

#set TRUE or leave blank to switch between pre-built vs local build of petalinux

USE_PLNX_PREBUILT ?=

# set global variables

export PLATFORM = zcu111_axis256

export OUT_DIR = ${CURDIR}/output

export PLNX_BOOT_BIT_IMAGE = ${CURDIR}/../app_build/binary_container_1/link/vivado/vpl/prj/prj.runs/impl_1/design_1_wrapper.bit

.PHONY: plnx_bootimage plnx_prebuilt_sync plnx_build_sync bootimage clean_vivado clean_sftwr clean_plnx clean_pfm clean

all: $(PLATFORM)

sftwr: $(OUT_DIR)/temp/sw_components/image/image.ub

#################################################################################

# Create and build Vivado project

#

vivado: ${OUT_DIR}/temp/xsa/${PLATFORM}.xsa

${OUT_DIR}/temp/xsa/${PLATFORM}.xsa:

echo "Starting Vivado build"

$(MAKE) -C vivado

#################################################################################

# Petalinux misc targets

# build BOOT.BIN in petalinux image dir using the .bit from the app

plnx_bootimage:

$(MAKE) -C petalinux bootimage

#################################################################################

# Either copy pre-built images to output temp dir or

# build petalinux

plnx_prebuilt_sync:

$(MAKE) -C petalinux_pre-built

plnx_build_sync:

$(MAKE) -C petalinux sync_out

plnx_build: $(OUT_DIR)/temp/sw_components/image/image.ub

$(OUT_DIR)/temp/sw_components/image/image.ub: vivado

ifdef USE_PLNX_PREBUILT

@echo '************ Using sftwr pre-built ************'

$(MAKE) -C petalinux_pre-built sync_out

else

@echo '************ Petalinux Build ************'

$(MAKE) -C petalinux refresh-hw

$(MAKE) -C petalinux linux

$(MAKE) -C petalinux sdk

$(MAKE) -C petalinux sync_out

endif

#################################################################################

# Build Platform

#

$(PLATFORM): vivado ./scripts/gen_pfm.tcl $(OUT_DIR)/temp/sw_components/image/image.ub $(OUT_DIR)/temp/sw_components/boot/ sysroot

@echo '************ Generating Platform ************'

xsct -sdx ./scripts/gen_pfm.tcl ${PLATFORM} ${OUT_DIR}

${RM} -r $(OUT_DIR)/repo/$(PLATFORM)

mkdir -p $(OUT_DIR)/repo

mv ${OUT_DIR}/temp/$(PLATFORM)/export/$(PLATFORM) $(OUT_DIR)/repo/$(PLATFORM)

cp $(OUT_DIR)/temp/sw_components/boot/* $(OUT_DIR)/repo/$(PLATFORM)/sw/$(PLATFORM)/boot

#@echo '************ Generating sysroot ************'

#$(OUT_DIR)/temp/sw_components/sdk.sh -y -d $(OUT_DIR)/repo/$(PLATFORM)/sysroot

sysroot: $(OUT_DIR)/repo/$(PLATFORM)/sysroot

$(OUT_DIR)/repo/$(PLATFORM)/sysroot: $(OUT_DIR)/temp/sw_components/sdk.sh

@echo '************ Generating sysroot ************'

mkdir -p $(OUT_DIR)/repo

$(OUT_DIR)/temp/sw_components/sdk.sh -y -d $(OUT_DIR)/repo/$(PLATFORM)/sysroot

#################################################################################

# Create boot.bin using accel app

#

# Note: result BOOT.BIN is in plnx image/linux dir

bootimage:

echo "*************** Creating BOOT.BIN in plnx image/linux **************"

$(MAKE) -C petalinux bootimage

#################################################################################

# Clean

#

clean_vivado:

$(MAKE) -C vivado clean

clean_sftwr:

${RM} -r $(OUT_DIR)/temp/sw_components

clean_plnx:

$(MAKE) -C petalinux ultraclean

clean_pfm:

${RM} -r ${OUT_DIR}/temp/$(PLATFORM)

${RM} -r ${OUT_DIR}/repo

clean:

${RM} -r ${OUT_DIR}

ultraclean: clean clean_plnx

Appendix C – System Verilog Code: RTL Kernel Wizard HDL Edited to Instance System Generator Output

////////////////////////////////////////////////////////////////////////////////

module myadder1_example #(

parameter integer C_OUT_TDATA_WIDTH = 128,

parameter integer C_IN_TDATA_WIDTH = 128

)

(

// System Signals

input wire ap_clk ,

input wire ap_rst_n ,

// Pipe (AXI4-Stream host) interface out

output wire out_tvalid,

input wire out_tready,

output wire [C_OUT_TDATA_WIDTH-1:0] out_tdata ,

output wire [C_OUT_TDATA_WIDTH/8-1:0] out_tkeep ,

output wire out_tlast ,

// Pipe (AXI4-Stream host) interface in

input wire in_tvalid ,

output wire in_tready ,

input wire [C_IN_TDATA_WIDTH-1:0] in_tdata ,

input wire [C_IN_TDATA_WIDTH/8-1:0] in_tkeep ,

input wire in_tlast

);

timeunit 1ps;

timeprecision 1ps;

assign out_tvalid = in_tvalid;

assign out_tkeep = in_tkeep;

assign out_tlast = in_tlast;

assign in_tready = out_tready;

lidar_final inst_lidar (

.clk (ap_clk),

.gatewayin0 (in_tdata[16-1:0]),

.gatewayin1 (in_tdata[32-1:16]),

.gatewayin2 (in_tdata[48-1:32]),

.gatewayin3 (in_tdata[64-1:48]),

.gatewayin4 (in_tdata[80-1:64]),

.gatewayin5 (in_tdata[96-1:80]),

.gatewayin6 (in_tdata[112-1:96]),

.gatewayin7 (in_tdata[128-1:112]),

.gatewayout0 (out_tdata[16-1:0]),

.gatewayout1 (out_tdata[32-1:16]),

.gatewayout2 (out_tdata[48-1:32]),

.gatewayout3 (out_tdata[64-1:48]),

.gatewayout4 (out_tdata[80-1:64]),

.gatewayout5 (out_tdata[96-1:80]),

.gatewayout6 (out_tdata[112-1:96]),

.gatewayout7 (out_tdata[128-1:112])

);

endmodule : myadder1_example

Appendix D – Makefile: Build Kernel Targets and Link Whole Design

# set global variables

export OUT_DIR = ${CURDIR}/output

export RTL_ACCEL = myadder1

PLATFORM = zcu111_axis256

PLATFORM_PATH = ../zcu111_axis256_pkg/output/repo

TEMP_DIR = $(OUT_DIR)/temp

BUILD_DIR = $(OUT_DIR)/build

RM = rm -f

RMDIR = rm -rf

ECHO:= @echo

CP = cp -rf

VPP := v++

# Kernel compiler global settings

VPP_OPTS += -t hw --platform $(PLATFORM_PATH)/$(PLATFORM)/$(PLATFORM).xpfm --save-temps

BINARY_CONTAINERS += $(BUILD_DIR)/myadder.xclbin

BINARY_CONTAINER_myadder_OBJS += ${OUT_DIR}/${RTL_ACCEL}/myadder1.xo

BINARY_CONTAINER_myadder_OBJS += $(TEMP_DIR)/dac_path.xo

BINARY_CONTAINER_myadder_OBJS += $(TEMP_DIR)/adc_path.xo

all: $(BINARY_CONTAINERS)

mine: $(TEMP_DIR)/myadder1.xo

platforminfo -j ./pfm_info.json -p ${PLATFORM_PATH}/${PLATFORM}/${PLATFORM}.xpfm

################################

# Kernel targets

################################

vivado: ${OUT_DIR}/${RTL_ACCEL}/${RTL_ACCEL}.xo

${OUT_DIR}/${RTL_ACCEL}/myadder1.xo:

@echo '************ Building rtl kernel Vivado Project ************'

$(MAKE) -C vv_rtl_kernel vivado

$(TEMP_DIR)/dac_path.xo: ./src/dac_path.cpp ./src/main.hpp

@mkdir -p $(@D)

-@$(RM) $@

$(VPP) $(VPP_OPTS) -c -k dac_path -I"$(<D)" --temp_dir $(TEMP_DIR) --report_dir $(TEMP_DIR)/reports --log_dir $(TEMP_DIR)/logs -o"$@" "$<"

$(TEMP_DIR)/adc_path.xo: ./src/adc_path.cpp ./src/main.hpp

@mkdir -p $(@D)

-@$(RM) $@

$(VPP) $(VPP_OPTS) -c -k adc_path -I"$(<D)" --temp_dir $(TEMP_DIR) --report_dir $(TEMP_DIR)/reports --log_dir $(TEMP_DIR)/logs -o"$@" "$<"

# Building kernel

$(BINARY_CONTAINERS): $(BINARY_CONTAINER_myadder_OBJS) ./myadder.ini

mkdir -p $(BUILD_DIR)

$(VPP) $(VPP_OPTS) -l --config ./myadder.ini --jobs 20 --temp_dir $(BUILD_DIR) -o'$@' $(BINARY_CONTAINER_myadder_OBJS)

# --report_dir $(BIN_CONT_DIR)/reports --log_dir $(BIN_CONT_DIR)/logs --remote_ip_cache $(BIN_CONT_DIR)/ip_cache -o"$@" $(BINARY_CONTAINER_1_OBJS) --sys_config zcu111_axis256

################################

# Clean

################################

.PHONY: clean ultraclean

clean:

$(RM) *.log *.jou

$(RM) pfm_info.json

$(RM) myadder.xsa

$(RMDIR) $(OUT_DIR)

ultraclean: clean

$(RMDIR) ./project_1

$(RMDIR) ./myadder1_ex

.PHONY: clean_vivado

clean_vivado:

$(MAKE) -C vv_rtl_kernel clean

About John Bloomfield

John Bloomfield is an RF and SerDes Specialist Field Applications Engineer (FAE) serving AMD customers in New England and eastern Canada. He has been helping AMD customers with high-speed SerDes since the Virtex 5 days. In the last few years, with the addition of analog RF capabilities in our RFSoC family, John now also helps customers to select, design in, and use these devices. Whether it’s “digital” SerDes or analog data converters, it’s all about moving those ones and zeros on and off our devices as fast as possible. Prior to AMD, John was an engineer and manager working in video, image processing, and FPGA acceleration. John lives in New Hampshire with his wife and son. He enjoys coffee, being a bit of a foodie, cycling and collecting tools (and using them too).